We are customer-centric and provide customized or one-stop full-stack solutions to empower all industries.

High-performance computing (HPC) has become the third pillar of scientific research, after theory and experiment. Advances in computer technology have given HPC powerful tools and a solid material foundation, making it possible to use computation to replace, optimize, deepen and extend theoretical and experimental research. Much theoretical research, application development and scientific experimentation that was once unimaginable or impractical can now be carried out on computer systems. Many HPC application fields increasingly want to exploit these advances to design new products and conduct research through larger-scale, more accurate numerical simulation, and so raise the level of scientific research, competitiveness and even overall national strength.

New Requirements for HPC Applications

The rapid growth in computation and data volume places increasingly high demands on the computing power, system bandwidth, memory capacity, storage devices and I/O throughput of computer systems, as well as on application software development technology. These demands fall mainly into the following three areas:

• Computing power: Completing a huge number of calculations in a short time requires not only processors with greater processing power (especially high-precision floating-point performance at 64 bits or above), but also parallel systems that support larger numbers of processors through architectures such as clusters or massively parallel processing (MPP).

• Storage capacity: To improve performance, it is often necessary to use very large memory (VLM) technology to hold an entire array in memory, which can require tens or even hundreds of GB of memory. Larger memory capacity in turn requires the system to provide larger disk storage capacity.

• System bandwidth: Growing data volumes sharply increase the amount of information exchanged between processor and memory, and between memory and disk. Moving this information quickly requires sufficient system bandwidth, so that memory can supply data to multiple processors in a timely manner.
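The memory and bandwidth requirements above can be made concrete with a back-of-the-envelope calculation. The sketch below is illustrative only: the grid size and the 10 GiB/s bandwidth figure are assumed values chosen for the arithmetic, not measurements of any particular system, and the function names are hypothetical.

```python
BYTES_PER_DOUBLE = 8   # one 64-bit floating-point value
GIB = 1024 ** 3        # bytes in one GiB

def array_bytes(nx, ny, nz, fields=1):
    """Memory needed to hold `fields` double-precision arrays of nx*ny*nz cells."""
    return nx * ny * nz * fields * BYTES_PER_DOUBLE

def transfer_seconds(num_bytes, bandwidth_bytes_per_s):
    """Time to stream `num_bytes` once at a given sustained bandwidth."""
    return num_bytes / bandwidth_bytes_per_s

# Assumed problem: a 2048 x 2048 x 2048 grid with one value per cell.
size = array_bytes(2048, 2048, 2048)
print(f"array size: {size / GIB:.0f} GiB")

# Assumed sustained bandwidth of 10 GiB/s between memory and processors.
print(f"one full sweep: {transfer_seconds(size, 10 * GIB):.1f} s")
```

Under these assumptions a single array already occupies 64 GiB, and every pass over it costs several seconds of pure data movement, which is why memory capacity and bandwidth have to grow together with computing power.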

GPUs in particular are designed for problems that can be expressed as data-parallel computations: the same program is executed in parallel on many data elements, with high computational density (the ratio of arithmetic operations to memory operations). Because every data element runs the same program, there is less need for sophisticated flow control; and because the program runs on many data elements with high computational density, memory access latency can be hidden by computation rather than by large data caches.
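Computational density can be estimated by counting operations. The following sketch compares two textbook kernels; the counts are idealized assumptions (for matrix multiplication, each matrix element is assumed to be loaded or stored exactly once, which real hardware only approaches with good data reuse), and the function names are hypothetical.

```python
def computational_density(math_ops, memory_ops):
    """Ratio of arithmetic operations to memory operations."""
    return math_ops / memory_ops

def vector_add_density(n):
    # c[i] = a[i] + b[i]: one add, two loads and one store per element.
    return computational_density(n, 3 * n)

def matmul_density(n):
    # C = A * B for n x n matrices: n^3 multiplies plus n^3 adds.
    # Idealized memory traffic: load A and B once, store C once (3 * n^2).
    return computational_density(2 * n**3, 3 * n**2)

print(f"vector add:      {vector_add_density(1024):.2f} ops per memory access")
print(f"matrix multiply: {matmul_density(1024):.2f} ops per memory access")
```

Vector addition stays at one third of an operation per memory access regardless of size, while the idealized density of matrix multiplication grows linearly with n. Kernels of the second kind are the ones that can hide memory latency behind computation.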

Data-parallel processing maps data elements to parallel processing threads. Many applications that process large data sets can use a data-parallel programming model to accelerate computation. In 3D rendering, large sets of pixels and vertices are mapped to parallel threads. Similarly, image and media processing applications (such as post-processing of rendered images, video encoding and decoding, image scaling, stereo vision, and pattern recognition) can map image blocks and pixels to parallel processing threads. Many algorithms outside image rendering and processing are also accelerated by data-parallel processing, from general signal processing and physical simulation to computational finance and computational biology. In these fields, GPU computing has been applied successfully and has delivered substantial speedups.
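The mapping of image blocks to threads can be sketched on a CPU with Python's standard `concurrent.futures` module. This is only a stand-in for the idea, not GPU code: the image is a plain list of rows, the per-pixel operation (inversion of an 8-bit grayscale value) and all function names are assumptions made for illustration, and threads in standard CPython builds do not run this pure-Python work truly in parallel.

```python
from concurrent.futures import ThreadPoolExecutor

def invert_pixel(value):
    """The single 'program' applied to every data element (8-bit grayscale)."""
    return 255 - value

def process_block(block):
    """Apply the same per-pixel operation to every pixel in one block of rows."""
    return [[invert_pixel(p) for p in row] for row in block]

def split_into_blocks(image, rows_per_block):
    """Cut the image into consecutive blocks of at most rows_per_block rows."""
    return [image[i:i + rows_per_block]
            for i in range(0, len(image), rows_per_block)]

def invert_image(image, rows_per_block=2, workers=4):
    blocks = split_into_blocks(image, rows_per_block)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # One task per block; map() returns results in block order.
        processed = list(pool.map(process_block, blocks))
    # Stitch the processed blocks back into a single image.
    return [row for block in processed for row in block]

image = [[0, 64], [128, 255], [10, 20]]
print(invert_image(image))
```

Each block is independent of the others, so the number of workers can change without touching the per-pixel code. That independence is the property a GPU exploits at a much finer granularity, typically one thread per pixel.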

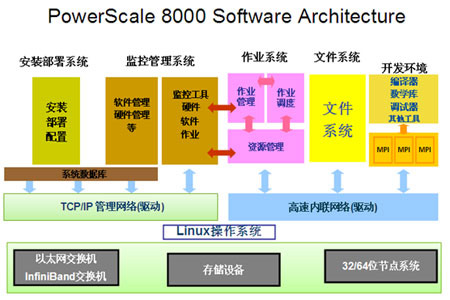

PowerLeader has also launched its own solution for today's mainstream HPC applications: the PowerLeader PowerScale 8000 high-performance computing cluster. The following figure shows its components:

The PowerScale 8000 high-performance computer from PowerLeader is a massively parallel cluster system designed specifically for large-scale analytical computing. PowerScale 8000 follows Intel’s open HPC ecosystem architecture in deploying all of its software and hardware:

PowerScale 8000 uses dual-socket, quad-core Xeon processors based on the Intel Nehalem architecture as computing nodes. It adopts a cluster architecture, interconnects the nodes through a standard, open high-speed network, runs open-source Linux, and presents a single system interface to users. It is designed for large-scale scientific parallel computing, while also supporting transaction processing and network information services.

Because it is based on the Nehalem platform, PowerScale 8000 can serve different types of applications, satisfying both frequency-sensitive and memory-bandwidth-sensitive workloads, which was previously difficult to achieve. In other words, Nehalem makes it possible for the first time to bring these two different classes of HPC application together on a single platform.

The software components of a complete PowerScale 8000 high-performance computer are:

Task operation mode: The customer runs a client program on a Windows PC and submits a computing task to the management node. The management node decomposes the task and distributes it to the computing nodes according to the parameters submitted by the client. The nodes exchange messages through an InfiniBand switch (or optical fiber). A properly assembled and tuned PowerScale 8000 system delivers high performance, flexibility and value for applications ranging from simple to complex.

It provides high floating-point performance, together with high-bandwidth, low-latency I/O and network data exchange, for demanding scientific computing.

It meets current business requirements and can be upgraded and expanded to support future business growth.

It gives users a simple, easy-to-use interface for operation and maintenance, lowering the barrier to using and maintaining the system.

HPC experts with many years of industry experience provide application-level operation and maintenance services, help users tune their applications, identify problems, and offer professional solutions.
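To illustrate the task operation mode described earlier, in which the management node decomposes a computing task across the computing nodes, the sketch below splits a range of work items into contiguous, near-equal chunks and combines the partial results. It is a simplified, single-process model under stated assumptions: the function names, the sum-of-squares workload and the even-split policy are hypothetical and do not describe the actual PowerScale 8000 scheduling software.

```python
def decompose(total_items, num_nodes):
    """Split range(total_items) into num_nodes contiguous, near-equal chunks."""
    base, extra = divmod(total_items, num_nodes)
    chunks, start = [], 0
    for node in range(num_nodes):
        # The first `extra` nodes each take one additional item.
        size = base + (1 if node < extra else 0)
        chunks.append((node, range(start, start + size)))
        start += size
    return chunks

def compute_node(work):
    """Stand-in for the real computation on one node: a sum of squares."""
    return sum(i * i for i in work)

def run_job(total_items, num_nodes):
    """Management-node view: decompose, run each chunk, combine the results."""
    partials = [compute_node(work) for _, work in decompose(total_items, num_nodes)]
    return sum(partials)

for node, work in decompose(10, 3):
    print(f"node {node}: items {work.start}..{work.stop - 1}")
print("combined result:", run_job(10, 3))
```

On a real cluster the chunks would be sent to the nodes over the interconnect, for example with a message-passing library, and the partial results gathered back. The decomposition step is the same regardless of the transport used.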