We are customer-centric and provide customized or one-stop full-stack solutions to empower all industries.

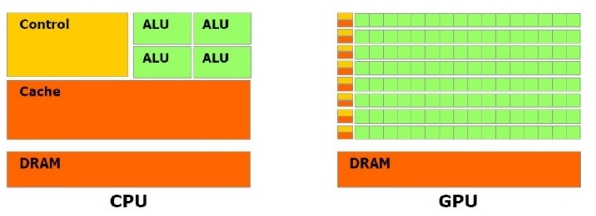

GPU computing refers to using graphics cards to perform general-purpose computing rather than traditional graphics rendering. Today, GPUs have developed into highly parallel, multi-threaded, many-core processors with outstanding computing power and extremely high memory bandwidth, as shown in the figure.

Specifically, GPUs are designed for problems that can be expressed as data-parallel computations: the same program is executed in parallel on many data elements, with high computational density (the ratio of arithmetic operations to memory operations). Because every data element runs the same program, there is less need for sophisticated flow control. And because the program runs on many data elements with high computational density, memory access latency can be hidden behind computation instead of relying on large data caches.
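To make the "computational density" ratio concrete, the sketch below counts operations for two textbook kernels. The counts are idealized assumptions for illustration: caching effects are ignored, and the matrix-multiply count assumes each matrix element is loaded or stored exactly once (real GPU kernels approach this with tiling). The function names are hypothetical.

```python
def saxpy_intensity() -> float:
    """Math ops per memory op for one element of y = a*x + y."""
    math_ops = 2        # one multiply, one add
    memory_ops = 3      # load x[i], load y[i], store y[i]
    return math_ops / memory_ops


def matmul_intensity(n: int) -> float:
    """Math ops per memory op for C = A @ B with n x n matrices,
    assuming each matrix element moves to or from memory exactly once."""
    math_ops = 2 * n**3     # n^3 multiply-add pairs
    memory_ops = 3 * n**2   # read A and B, write C
    return math_ops / memory_ops


if __name__ == "__main__":
    print(f"SAXPY:            {saxpy_intensity():.2f}")
    for n in (16, 256, 4096):
        print(f"matmul n={n:>5}: {matmul_intensity(n):.1f}")
```

SAXPY stays below one arithmetic operation per memory operation regardless of size, while the idealized matrix multiply grows as 2n/3. Workloads like the latter give the GPU enough arithmetic to overlap with outstanding memory accesses.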

Data-parallel processing maps data elements to parallel processing threads. Many applications that process large data sets can use a data-parallel programming model to accelerate computation. In 3D rendering, large sets of pixels and vertices are mapped to parallel threads. Similarly, image and media processing applications (such as post-processing of rendered images, video encoding and decoding, image scaling, stereo vision, and pattern recognition) can map image blocks and pixels to parallel processing threads. In fact, many algorithms outside image rendering and processing are also accelerated by data-parallel processing, from general signal processing and physics simulation to computational finance and computational biology. GPU computing has been applied successfully in all of these fields and has delivered substantial speedups.
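The mapping of pixels to threads can be sketched in plain Python. This is a sequential stand-in, not GPU code: the hypothetical `launch` helper simply enumerates every (block, thread) pair that a GPU would run in parallel, and each "thread" computes its global index with the same formula a CUDA kernel uses (`blockIdx.x * blockDim.x + threadIdx.x`). The brightness-scaling kernel and its parameters are illustrative assumptions.

```python
from typing import List


def brighten_kernel(thread_id: int, pixels: List[int], out: List[int], gain: float) -> None:
    """Work done by one 'thread': process exactly one pixel."""
    if thread_id < len(pixels):               # guard: the last block may be partly empty
        out[thread_id] = min(255, int(pixels[thread_id] * gain))


def launch(kernel, n_items: int, block_dim: int, *args) -> None:
    """Sequential stand-in for a GPU launch: visit every (block, thread) pair."""
    grid_dim = (n_items + block_dim - 1) // block_dim   # ceil(n_items / block_dim)
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            global_id = block_idx * block_dim + thread_idx  # one data element per thread
            kernel(global_id, *args)


pixels = [10, 50, 100, 200, 250]
out = [0] * len(pixels)
launch(brighten_kernel, len(pixels), 2, pixels, out, 1.5)
print(out)  # [15, 75, 150, 255, 255]
```

Because each thread touches only its own pixel, the order in which threads run does not matter, which is exactly what lets a GPU execute them all at once.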

The GPU high-performance computing solution balances general-purpose CPUs and dedicated GPUs. This preserves the processing performance of the GPUs while retaining the computing power of the general-purpose CPUs, so the system serves both highly parallel applications suited to GPUs and applications that are not highly parallel or have not yet been ported to GPUs. Because GPUs deliver high floating-point performance, the solution uses them as the main computing resource, bringing graphics processors into the field of high-performance computing.
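The balanced CPU/GPU design implies a routing decision for each workload. The sketch below shows one hypothetical policy under that assumption: ported, data-parallel jobs go to GPUs and everything else stays on CPUs. The `Job` fields and `choose_resource` function are illustrative names, not the solution's actual scheduler.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    data_parallel: bool   # does the workload map onto many independent data elements?
    gpu_port: bool        # has the application been ported to run on GPUs?


def choose_resource(job: Job) -> str:
    """Send ported, data-parallel work to GPUs; keep everything else on CPUs."""
    if job.data_parallel and job.gpu_port:
        return "gpu"
    return "cpu"


jobs = [
    Job("molecular-dynamics", data_parallel=True, gpu_port=True),
    Job("legacy-solver", data_parallel=True, gpu_port=False),
    Job("pre-processing-script", data_parallel=False, gpu_port=False),
]
for job in jobs:
    print(f"{job.name:<22} -> {choose_resource(job)}")
```

A parallel workload that has not been ported still runs on CPUs, which is why the design keeps substantial CPU capacity alongside the GPUs.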

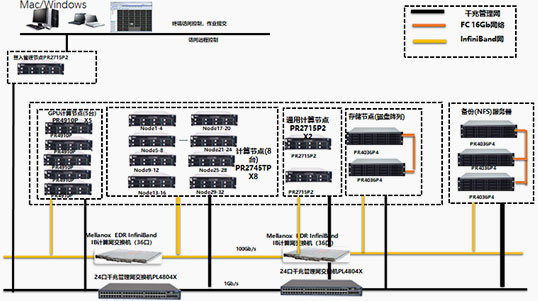

The GPU computing node uses the Powerleader PR4910P, which is highly scalable and supports up to 10 full-height, full-length GPU slots. It also supports a variety of network options, providing high-speed performance and I/O flexibility to meet the interconnection needs of different applications.

The storage node uses the Boyd PR4036P4, which offers high scalability and high availability to address the storage challenges posed by explosive data growth. It also supports smart arrays, which significantly improve I/O performance and data security.

For network communication, all nodes are connected through a high-speed InfiniBand network for full interconnection. This greatly reduces inter-node communication latency and gives the cluster a high-bandwidth, low-latency environment for I/O and network data exchange.
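Why both latency and bandwidth matter can be shown with the common latency-bandwidth model, in which transfer time is a fixed latency plus message size divided by bandwidth. The link parameters below are hypothetical round numbers chosen for illustration, not measured InfiniBand or Ethernet figures.

```python
def transfer_time_us(message_bytes: int, latency_us: float, bandwidth_gbps: float) -> float:
    """Latency-bandwidth model: time = latency + size / bandwidth.

    bandwidth_gbps is in gigabits per second; the result is in microseconds.
    """
    bits = message_bytes * 8
    return latency_us + bits / (bandwidth_gbps * 1e3)   # 1 Gbit/s = 1e3 bits per microsecond


# Hypothetical links: a low-latency, high-bandwidth fabric vs. a slower one.
fast = dict(latency_us=1.0, bandwidth_gbps=100.0)
slow = dict(latency_us=20.0, bandwidth_gbps=10.0)

for size in (64, 1_000_000):
    print(f"{size:>9} B: fast {transfer_time_us(size, **fast):8.2f} us, "
          f"slow {transfer_time_us(size, **slow):8.2f} us")
```

Small messages are dominated by latency and large ones by bandwidth, so a cluster that exchanges both kinds of traffic benefits from improving both terms.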

The system supports hybrid CPU and GPU computing. It has high computing density and can deliver more than 500 TFLOPS (single precision). It also scales well and can be expanded to the petaflops range.
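Scaling a cluster toward a performance target is mostly arithmetic on peak figures. The sketch below assumes 10 GPUs per node (the slot count given for the PR4910P) and a hypothetical single-precision peak of 12 TFLOPS per GPU; actual per-GPU figures depend on the card, and sustained application performance is lower than theoretical peak.

```python
import math


def nodes_needed(target_tflops: float, gpus_per_node: int, tflops_per_gpu: float) -> int:
    """Smallest number of GPU nodes whose combined peak meets the target."""
    per_node = gpus_per_node * tflops_per_gpu     # peak TFLOPS contributed by one node
    return math.ceil(target_tflops / per_node)


GPUS_PER_NODE = 10        # from the node's maximum GPU slot count
TFLOPS_PER_GPU = 12.0     # hypothetical single-precision peak per GPU

for target in (500, 1000):  # 500 TFLOPS and 1 PFLOPS
    print(f"{target} TFLOPS -> {nodes_needed(target, GPUS_PER_NODE, TFLOPS_PER_GPU)} nodes")
```

Under these assumptions each node contributes 120 TFLOPS, so 500 TFLOPS needs 5 nodes and 1 PFLOPS needs 9.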

GPU nodes, storage nodes, computing nodes, and other components are highly scalable, so they can meet current business requirements and can also be upgraded and expanded as business volume grows.

Unified cluster management and job scheduling, combined with Blade's high-performance servers, improve the stability of the entire system at every level, giving users a more stable platform with lower failure rates.